You find yourself developing a new mobile app and are wondering whether it should run on Apple or Android devices. Consider this: worldwide active-user data puts the number of Apple users at 1 billion, while Android users top the 2 billion mark. Together these two platforms accounted for 99.6% of all new smartphone sales in the fourth quarter of 2016. So unless you have a particular reason to standardize on just one platform (if, for example, you’re the one providing the devices to your users), you will likely need to support both. If you have the resources to develop for both at once, good for you. But if you’re constrained and have to build one at a time, which comes first?

A quick Google search reveals countless business and technical analyses on this topic, along with lots of personal preferences. How do you make your case for which platform to support first? Many companies use market share and demographic information about their user base to make that decision. It’s a good place to start, but there are additional angles to consider. Here are some of the important ones:

Look at market share, but also consider ease of development

According to CIRP’s research, Android accounted for 67% of US phone activations from April through June 2017, up from 63% in the same quarter the previous year. Over that year, iOS activations dropped from 32% to 31%. So on the grand scale, the market seems to favor Android. But is the same true for your market? Perhaps your customers and users differ demographically and have different preferences. If you’re going to use market data to drive your choice, make sure you use the right data. I can’t help much with that, because only you know your own customers.

So, if the market data is not clear, which platform do you bet your application development money on? Let’s look at how development issues might inform this choice.

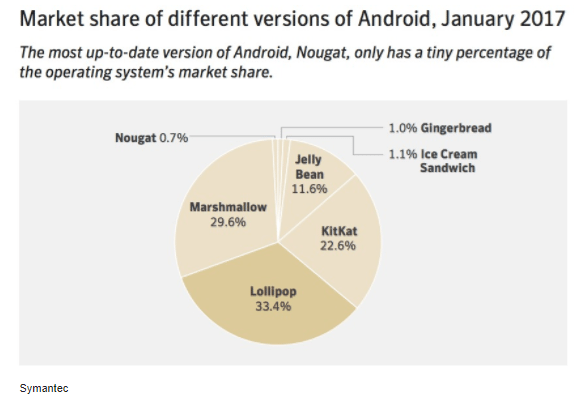

In a previous article, I discussed the differences in iOS and Android platforms from a development perspective. One big consideration is the single-version aspect of the iPhone versus the numerous Android versions. Apple has traditionally been able to maintain an impressively high adoption rate for iOS updates, so the audience of iOS users is fairly uniform (basically screen size is the only differentiator). For Android, not so much—the graphic below is a telling illustration of the complexities of keeping current with the different Android OS versions (which are all delightfully named for candies and other sweets):

This fragmentation, combined with the wide variety of brands and devices on the Android platform (many running brand-specific flavors of the OS), makes developing and testing an Android application far more burdensome than working within the near-monoculture of iOS. It takes an enormous amount of coding and testing, not to mention the myriad devices you need to purchase to test your application effectively.

Based solely on ease of development and the intricacies of testing, Apple would win the which-comes-first argument. And since you will likely support both platforms eventually, tackling the easier one first has its benefits: the lessons you learn there will make the second platform go more smoothly.

Are you thinking about security?

Even though the premise of this article is that you will likely support both platforms (so security is not necessarily a criterion for which comes first), it never hurts to talk about security. If security is important to you (and it should be), you’ll need to consider the platforms from a vulnerability perspective.

Because Android is open source, smartphone manufacturers can modify the OS as they see fit, which increases the potential for vulnerabilities. Google is trying to address this through its Android compatibility testing program, and the latest version, Android Oreo (8.0, released in August 2017), is touted as being “more secure.” Many threats to the Android OS could be largely eliminated if all users simply upgraded their devices to the latest version. But, as illustrated above, this is not a common practice among Android users.

Typically, Android users purchase equipment from a third-party device manufacturer (Samsung, HTC, and too many others to name), not directly from Google. They therefore have to rely on both that manufacturer and their wireless carrier to release a compatible software update for their specific device. As a result, it’s common for, say, Verizon’s and AT&T’s Samsung offerings to be at different software update levels. Apple, on the other hand, controls both device manufacturing and iOS software development, which lets them control the process tightly and release software updates for all of their supported devices simultaneously.

Because Apple has a high upgrade rate and controls both the hardware and the software, they can impose tighter security. They control which apps are available on their App Store, vetting every app to keep malware from reaching consumers. They also occasionally recheck apps and shut down any that are found to contain malware. But they can’t catch all malware, and the iOS platform still has its fair share of vulnerability issues.

Needless to say, security risks on either platform exist and need to be managed.

Who controls the device?

We discussed earlier how your market demographics might influence your choice of platform, which assumed that you’re building an app for public consumption. That’s not always the case; many enterprises build mobile apps for their own internal use.

In many cases those apps are meant to be used on the staff’s own personal devices, a practice known as “bring your own device,” or BYOD. Many enterprises have learned that you can’t tell your people what to use even if you offer to supply the device. In that case your choice is similar to the consumer case: you can start with the platform most prevalent among your staff, knowing that you will eventually have to support the other, and plan for this up front.

If your business is such that you are providing the device together with the application, your life is a lot easier because you can choose the one you want to support. There are many factors that influence your choice, including:

- Device cost is probably the most important factor for you. The Android market offers a wide range of options, from inexpensive to pricey high-end units. Apple famously lives only at the costly end of that spectrum. If you’re deploying apps into a rough environment (equipping your truck drivers, for example), you might choose the cheapest possible Android device as a nearly “disposable” solution, or you might opt for a more reliable (and costly) device with a high-impact protective case. It depends on how disruptive the loss of or damage to a device is to your business. Remember, though, that the cheaper devices tend to come from second-tier manufacturers and often present challenges to software developers because of poor implementations of the Android operating system.

- User acceptance matters internally, just as it does in the marketplace. Do your internal users object to being told what device to use? You may be surprised at the reluctance (and resistance) you will encounter if you insist that, say, an iPhone user run an application on an Android phone. If the application is used only on the job, such as on a point-of-sale device, users won’t mind leaving the device behind when they’re not working. But when they need to use the app out on the road, users most likely will not want to carry two devices.

That brings us to the next consideration.

Why not both at once? Be prepared to staff accordingly

The theme of this discussion has been “which platform first” because we’re used to clients wanting to keep their staff size small. But if your market demands a simultaneous release on both platforms, that can be done if you are willing to plan and staff your team to keep both versions moving forward together. This is actually the ideal way to do it: one team focuses on the code that is common to both platforms, while an iOS team and an Android team each focus on their specific platform. This avoids the “leapfrog” approach, where you move back and forth between the two platforms and the latest version on one platform lags behind the latest version on the other. Either approach is a legitimate strategy, but you need to plan carefully to ensure that you are appropriately staffed for whichever one you choose.

Choose the right tools

This article isn’t meant to be about the actual development technologies you’ll use to build the app, but tooling bears mentioning because it affects your plans, especially your staffing.

Traditionally, Android apps are built in Java (a widely used, general-purpose language) and iOS apps are built in either Objective-C or Swift (both supported by Apple). To support both platforms this way, you’ll need staff conversant in each platform’s languages.

There is a third option from Microsoft, called Xamarin, that is designed to simplify this process. It handles both platforms equally well using a single programming language, C#. In a well-structured project, much of the code can be shared between the iOS and Android applications. That makes life easier for your developers, because they work in one programming language for both versions. All of this decreases time to market, simplifies the development process, and makes the “which platform?” decision a lot easier.
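What a “well-structured project” with shared code might look like can be sketched in a language-neutral way. The Python below is purely illustrative. It is not Xamarin or C# code, and every class name in it (`TokenStore`, `SessionManager`, `IosTokenStore`, `AndroidTokenStore`) is hypothetical. The assumption is a common pattern: shared business logic depends only on a small interface, and each platform team supplies its own implementation of that interface.

```python
from abc import ABC, abstractmethod
from typing import Dict, Optional


class TokenStore(ABC):
    """Hypothetical platform interface: each platform team implements this."""

    @abstractmethod
    def save(self, token: str) -> None:
        ...

    @abstractmethod
    def load(self) -> Optional[str]:
        ...


class SessionManager:
    """Shared core logic, written once and reused unchanged by both apps."""

    def __init__(self, store: TokenStore) -> None:
        self._store = store

    def log_in(self, token: str) -> None:
        # Validation lives in shared code, so both platforms behave the same.
        if not token.strip():
            raise ValueError("token must not be blank")
        self._store.save(token)

    def is_logged_in(self) -> bool:
        return self._store.load() is not None


class IosTokenStore(TokenStore):
    """Stand-in for iOS-specific storage code (kept in memory here)."""

    def __init__(self) -> None:
        self._token: Optional[str] = None

    def save(self, token: str) -> None:
        self._token = token

    def load(self) -> Optional[str]:
        return self._token


class AndroidTokenStore(TokenStore):
    """Stand-in for Android-specific storage code (kept in memory here)."""

    def __init__(self) -> None:
        self._prefs: Dict[str, str] = {}

    def save(self, token: str) -> None:
        self._prefs["auth_token"] = token

    def load(self) -> Optional[str]:
        return self._prefs.get("auth_token")


if __name__ == "__main__":
    # The same shared logic runs against either platform's implementation.
    for store in (IosTokenStore(), AndroidTokenStore()):
        session = SessionManager(store)
        session.log_in("example-token")
        print(type(store).__name__, session.is_logged_in())
```

The design choice is what matters here. The more logic that sits on the shared side of the interface, the less each platform team has to write and test twice.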